Can You Upload Zip to S3 and Unzip

Extract Zip Files From and Back to the S3 Bucket using Node.js

A Lambda function to unzip files from an S3 bucket.

I have been wondering how to upload multiple files from the client-side of my web application for a couple of days now. I found a similar question on StackOverflow, but the accepted answer was implemented using the AWS CLI. I could certainly implement this using the CLI through child processes in Node.js on the backend, but surely there is a better and faster approach than this.

Another article talked about uploading using Promise.all, but that is certainly not an option on the client-side. I also found some implementations using Python and boto3, but my app was written in Node.js, so I can't afford to add another language to my dependency list. Then I searched for more on this topic and found this article written by Akmal Sharipov. His article was certainly helpful, but I faced some problems using this library (it might be my mistake in how I implemented it).

After some digging, I found that a zip file has its Central Directory located at the end of the file, and that the local headers, which are a copy of the Central Directory, are highly unreliable. The read methods of most other streaming libraries buffer the entire zip file in memory, thus defeating the entire purpose of streaming it in the first place. So here's another algorithm I came up with using the yauzl library, which he mentioned in his article as well.
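
To make the point about the Central Directory concrete, here is a minimal sketch that locates the End of Central Directory record by scanning a buffer backwards from the end. The function name findEndOfCentralDirectory is made up for this example, and the sketch ignores ZIP64 archives; yauzl does this work for you, so this is only an illustration.

```javascript
// Signature and minimum size of the End of Central Directory (EOCD) record.
const EOCD_SIGNATURE = 0x06054b50;
const EOCD_MIN_SIZE = 22;
const MAX_COMMENT_SIZE = 0xffff;

// Hypothetical helper: scan backwards from the end of the buffer for the EOCD
// record and return the fields that say where the Central Directory lives.
// Returns null when no record is found. ZIP64 is not handled.
function findEndOfCentralDirectory(buffer) {
  const lowest = Math.max(0, buffer.length - EOCD_MIN_SIZE - MAX_COMMENT_SIZE);
  for (let i = buffer.length - EOCD_MIN_SIZE; i >= lowest; i--) {
    if (buffer.readUInt32LE(i) === EOCD_SIGNATURE) {
      return {
        entryCount: buffer.readUInt16LE(i + 10),
        centralDirectorySize: buffer.readUInt32LE(i + 12),
        centralDirectoryOffset: buffer.readUInt32LE(i + 16),
      };
    }
  }
  return null;
}
```

Because this record sits at the very end, a reader that wants the reliable entry list needs access to the tail of the file before it can start extracting.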

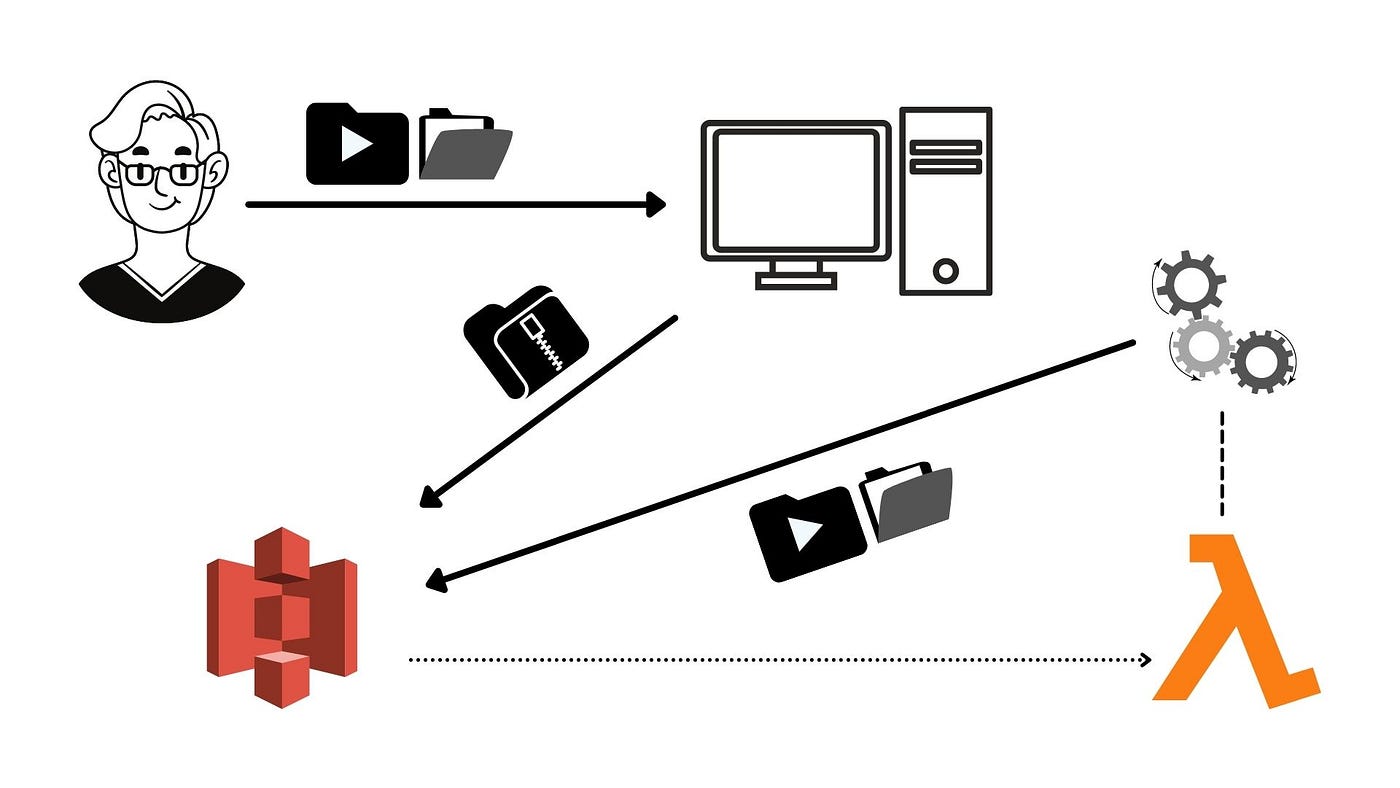

- The user uploads lots of files through my app.

- My app zips those files using the yazl library and uploads the zip to an S3 bucket from the client-side.

- An S3 'put' event triggers the Lambda function.

- The Lambda function pulls the whole object (zip file) into its memory buffer.

- It reads one entry and uploads it back to S3.

- When the upload finishes, it proceeds to the next entry and repeats step 5.

In my test, this algorithm never hit the RAM limit of the Lambda function. The max memory usage was under 500MB for extracting a 254MB zip file containing 2.24GB of files.

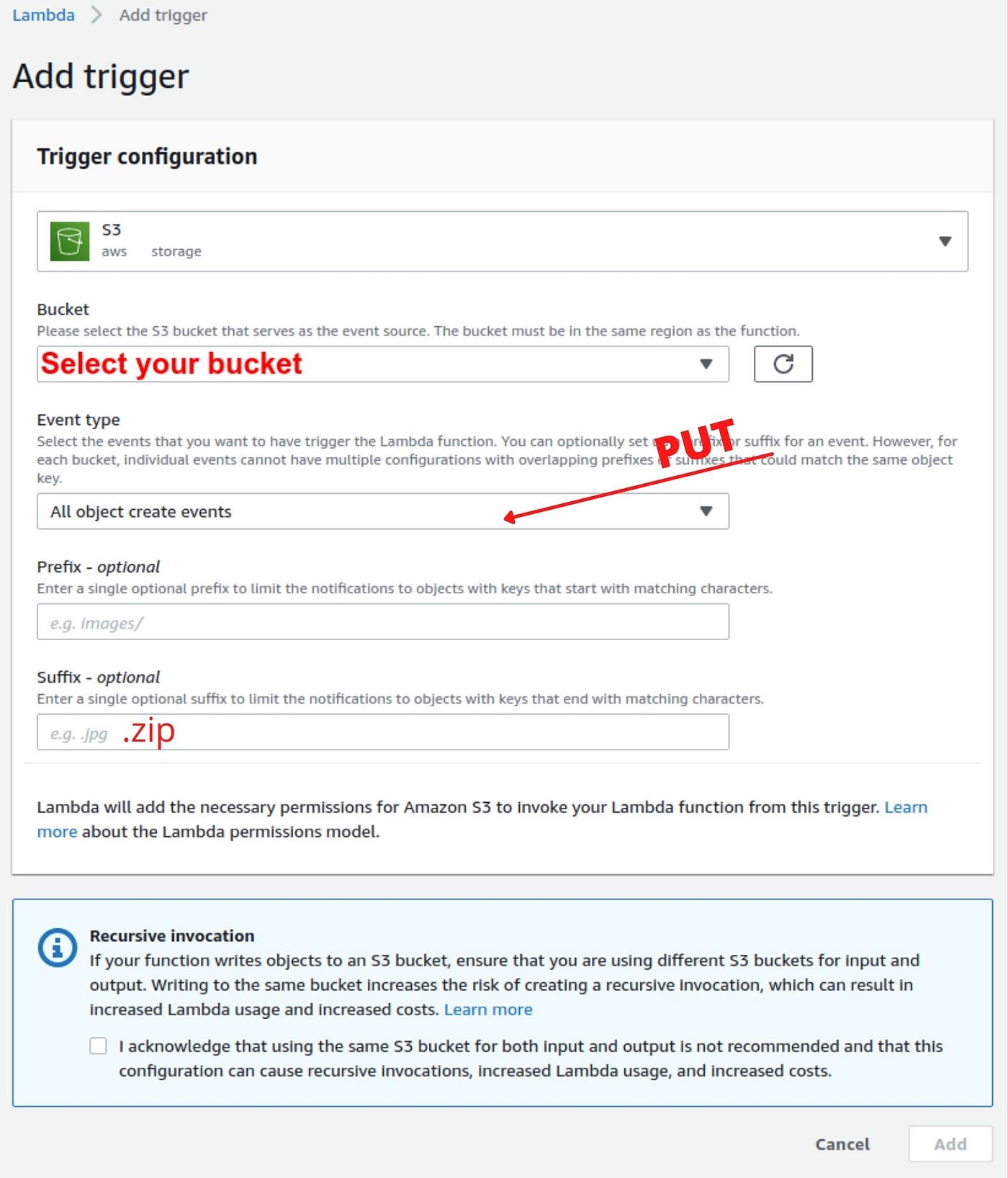

Create a Lambda function and add an S3 trigger

Before creating the Lambda function, create an IAM role with full S3 and Lambda permissions. Now go to the Lambda services section and click on the 'Create function' button in the top right corner. On the next page, select 'Author from scratch' and give the function a name. In the Permissions section, select 'Use an existing role' and choose the role that you defined earlier; you can always change the permissions afterwards. Then click on the 'Create function' button in the bottom right corner of the page.

Configure the Lambda function so that it is triggered whenever a zip file is uploaded to the S3 bucket. Click on the 'Add trigger' button in the Function overview section and select an S3 event from the dropdown. Then choose your bucket, select 'PUT' as the event type, and don't forget to add '.zip' in the suffix field, or the function will invoke itself in a loop. Then click on 'Add' to add the trigger to the Lambda function.
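
As an extra safeguard on top of the suffix filter, the handler can also check the key itself before doing any work. This is a small sketch, not part of the original code; shouldProcess is a hypothetical helper name, and the check is case-sensitive to mirror the suffix filter.

```javascript
// Hypothetical guard: only keys ending in '.zip' should be extracted.
// Mirrors the '.zip' suffix filter configured on the S3 trigger.
function shouldProcess(key) {
  return typeof key === 'string' && key.endsWith('.zip');
}
```

Note that a zip file nested inside the uploaded archive would still match both the suffix filter and this check once it is extracted back into the same bucket.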

Lambda function to fetch objects from the S3 bucket

We will use the AWS SDK to get the file from the S3 bucket. Inside the event object of the handler function, we have the Records array, which contains the S3 bucket name and the key of the object that triggered this Lambda function. We will use the Records array to get the bucket and the key for the S3.getObject(params, [callback]) method to fetch the zip file.
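
A minimal sketch of such a handler follows. It assumes the AWS SDK v2 client, whose getObject(params).promise() resolves with the object and its Body as a Buffer. The names getObjectParams and createHandler are invented for this example, and the client is passed in so the function can be tried out without AWS.

```javascript
// Pull the bucket name and object key out of an S3 event notification.
function getObjectParams(event) {
  const { s3 } = event.Records[0];
  return {
    Bucket: s3.bucket.name,
    // Keys arrive URL-encoded in the event, with spaces encoded as '+'.
    Key: decodeURIComponent(s3.object.key.replace(/\+/g, ' ')),
  };
}

// `s3` is assumed to be an AWS SDK v2 S3 client.
function createHandler(s3) {
  return async (event) => {
    const { Bucket, Key } = getObjectParams(event);
    const object = await s3.getObject({ Bucket, Key }).promise();
    console.log(object); // object.Body holds the whole zip file in memory
    return { Bucket, Key, size: object.Body.length };
  };
}

// In the Lambda's index.js (assuming the aws-sdk v2 package is available):
// const AWS = require('aws-sdk');
// exports.handler = createHandler(new AWS.S3());
```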

Upload object to S3 bucket from a stream

This is the main piece of the code, and it was a little tricky. Apparently, passing the readable stream directly to the Body property of the params parameter doesn't work, at least in the Lambda environment. So I searched on Google, as I usually do, and found this StackOverflow question, which fits my requirement perfectly. Here's the code.
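
The embedded snippet is not reproduced here, so what follows is only a sketch of the PassThrough approach commonly suggested for this problem. It assumes an AWS SDK v2 client, whose upload(params).promise() accepts a stream as Body. Passing the client in as the first argument is a choice made for this example and may differ from the original signature.

```javascript
const { PassThrough } = require('stream');

// Returns a writable stream plus a promise that settles when the upload ends.
// `s3` is assumed to be an AWS SDK v2 S3 client.
function uploadStream(s3, { Bucket, Key }) {
  const pass = new PassThrough();
  return {
    writeStream: pass,
    // Whatever is written to `pass` is read by the SDK as the object body.
    promise: s3.upload({ Bucket, Key, Body: pass }).promise(),
  };
}

// Usage (inside an async function):
// const { writeStream, promise } = uploadStream(s3, { Bucket, Key: 'file.txt' });
// readable.pipe(writeStream);
// await promise;
```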

To use this function, we need to call it first and then pipe the readable stream to the writeStream property it returns.

Unzip the object and upload each entry back to S3

The yauzl library is one of the most popular unzip libraries in the npm registry. It is also very reliable, and I don't want my app to crash because of some silly library issue. The documentation is very user-friendly as well. I'll use the fromBuffer method, as the zip file will be available to us only as a buffer. Let's dive into the code for unzipping the file.

This function will read one entry from the zip file and then upload it back to the S3 bucket using the uploadStream function we implemented earlier. Only after the upload of one entry/file has finished will it read the next entry and upload it, and the loop goes on until the end of the zip file is encountered. It resolves the promise with the 'end' message when there's nothing left in the zip file.
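
The loop described above can be sketched roughly as follows, using yauzl's fromBuffer with the lazyEntries option so that entries are only read when readEntry() is called. The makeExtractZip wrapper, and the idea of passing in yauzl and an upload helper as arguments, are assumptions made for this example so the pieces stay separate; skipping directory entries is also a choice of this sketch.

```javascript
// `yauzl` is the yauzl module; `uploadStream({ Bucket, Key })` is assumed to
// return { writeStream, promise } as described in the previous section.
function makeExtractZip(yauzl, uploadStream) {
  return function extractZip(Bucket, buffer) {
    return new Promise((resolve, reject) => {
      yauzl.fromBuffer(buffer, { lazyEntries: true }, (err, zipfile) => {
        if (err) return reject(err);
        zipfile.on('error', reject);
        zipfile.on('end', () => resolve('end'));
        zipfile.on('entry', (entry) => {
          if (entry.fileName.endsWith('/')) {
            // Directory entry: nothing to upload, move on.
            zipfile.readEntry();
            return;
          }
          zipfile.openReadStream(entry, (streamErr, readStream) => {
            if (streamErr) return reject(streamErr);
            const { writeStream, promise } = uploadStream({ Bucket, Key: entry.fileName });
            readStream.on('error', reject);
            readStream.pipe(writeStream);
            // Ask for the next entry only after this upload has finished.
            promise.then(() => zipfile.readEntry()).catch(reject);
          });
        });
        zipfile.readEntry(); // start with the first entry
      });
    });
  };
}

// Possible wiring (assumes yauzl is installed and `s3` is an SDK v2 client):
// const extractZip = makeExtractZip(require('yauzl'), (p) => uploadStream(s3, p));
```

Because only one entry is open at a time, memory use is bounded by the zip buffer plus whatever the current upload is holding.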

Putting it all together

The entire code is available in the above repository. You just need to clone the repository and then run npm install followed by npm run zip. Then upload the lambda.zip file in the Lambda's code section of the console.

Don't worry if you don't want to clone my repository: you can paste all the functions I shared into the index.js file and replace console.log(object) at line 15 of 'exports.handler' with this line: const result = await extractZip(Bucket, object.Body);

Concluding Words

I only implemented the algorithm a little differently from the other implementations I discussed before. My approach was not to stream the zip file but to stream the entries one at a time. But all the other algorithms are great as well.

If you find this article helpful, make sure to leave a clap for me. And here's another article you might like.

Happy hacking!

Source: https://aws.plainenglish.io/extract-zip-files-from-and-back-to-the-s3-bucket-using-node-js-f19f009ace22
